
Robots.txt Checker

Check, Validate, and Test the Robots.txt File of Any Site

Plus, Gain Access to Insights From 1,056,000 Analyzed Websites

Test and validate your robots.txt file for syntax errors, and check whether pages and resources (CSS, JavaScript, and images) are accessible to crawlers. Our interpreter makes any robots.txt file easy to understand.

Stats Summary

The stats section of our robots.txt testing tool presents the latest results of our ongoing study of websites' robots.txt files. The study's primary goal is to find the most frequently blocked bots, both AI and non-AI.

The analysis covers a backlog of the top 1 million websites by traffic, whose robots.txt files are analyzed on an ongoing basis. It also includes domains that users analyze manually with our robots.txt testing tool.

Our dataset takes into account only the most recent analysis of each domain, ensuring no domain is represented more than once. Check out the Universal Web Crawler Blocking Report on Saeed's website to learn more about the methodology.
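The deduplication rule above counts only the most recent analysis of each domain. It can be sketched in Python as follows. The `Analysis` record, its fields, the toy sample records, and the case-insensitive domain comparison are all assumptions made for illustration. They are not the study's actual data model.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Analysis:
    """One robots.txt analysis of a domain (hypothetical record shape)."""
    domain: str
    analyzed_on: date
    blocked_bots: frozenset[str]


def latest_per_domain(analyses: list[Analysis]) -> dict[str, Analysis]:
    """Keep only the most recent analysis of each domain."""
    latest: dict[str, Analysis] = {}
    for analysis in analyses:
        key = analysis.domain.lower()  # assumption: domains compare case-insensitively
        current = latest.get(key)
        if current is None or analysis.analyzed_on > current.analyzed_on:
            latest[key] = analysis
    return latest


# Toy records, not real study data.
runs = [
    Analysis("example.com", date(2024, 1, 5), frozenset({"GPTBot"})),
    Analysis("Example.com", date(2024, 3, 1), frozenset({"GPTBot", "CCBot"})),
    Analysis("example.org", date(2024, 2, 2), frozenset()),
]
deduped = latest_per_domain(runs)
print(len(deduped))                                 # 2
print(sorted(deduped["example.com"].blocked_bots))  # ['CCBot', 'GPTBot']
```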

The first chart (a stacked bar chart) shows, for each bot, the categories of the sites that block it in their robots.txt files. Analyzed sites are grouped into 18 categories. Hovering over a color segment shows a tooltip with the category name, the bot name, and the number of sites blocking that bot. We update the stacked chart monthly.

The stacked chart covers only the sites we have analyzed from our dataset of the top 1 million sites by traffic. It does not include the domains our users analyze. It therefore better reflects which web crawlers high-traffic sites block in their robots.txt files.

The second bar chart and the table below show the most frequently blocked bots across all analyzed websites (1,056,000 sites). The Y-axis of the bar chart shows the number of websites that universally block a given bot, and the X-axis shows the bot name.

| Bot Group | Occurrences |
|---|---|
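The following is a minimal sketch of how per-bot counts like those in the chart and table could be computed. It assumes that "universally blocked" means two things: the bot is named in its own user-agent group, and that group contains `Disallow: /`. The `*` wildcard group is ignored. The helper names and sample files are hypothetical, and the published methodology may differ.

```python
from collections import Counter


def universally_blocked_bots(robots_txt: str) -> set[str]:
    """Return lowercased user-agent tokens whose group contains 'Disallow: /'.

    Assumption: only explicitly named bots count; the '*' group is skipped.
    """
    blocked: set[str] = set()
    agents: list[str] = []  # user-agents of the group currently being read
    in_rules = False        # True once the current group has an allow/disallow line
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:  # a user-agent line after rules starts a new group
                agents, in_rules = [], False
            agents.append(value)
        elif field in ("allow", "disallow"):
            in_rules = True
            if field == "disallow" and value == "/":
                # Tokens match case-insensitively, so count them lowercased.
                blocked.update(a.lower() for a in agents if a != "*")
    return blocked


def count_blocks(robots_files: list[str]) -> Counter:
    """Count, per bot token, how many sites block it universally."""
    counts: Counter = Counter()
    for text in robots_files:
        counts.update(universally_blocked_bots(text))
    return counts


# Hypothetical robots.txt files from three sites.
sites = [
    "User-agent: GPTBot\nDisallow: /\n\nUser-agent: *\nDisallow: /private/",
    "User-agent: GPTBot\nUser-agent: CCBot\nDisallow: /",
    "User-agent: *\nDisallow: /",
]
print(count_blocks(sites).most_common())  # [('gptbot', 2), ('ccbot', 1)]
```

The third site blocks every crawler through `*`, but it adds nothing to the counts. Under the stated assumption, only bots named explicitly are counted.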

Why did we create this robots.txt checker?

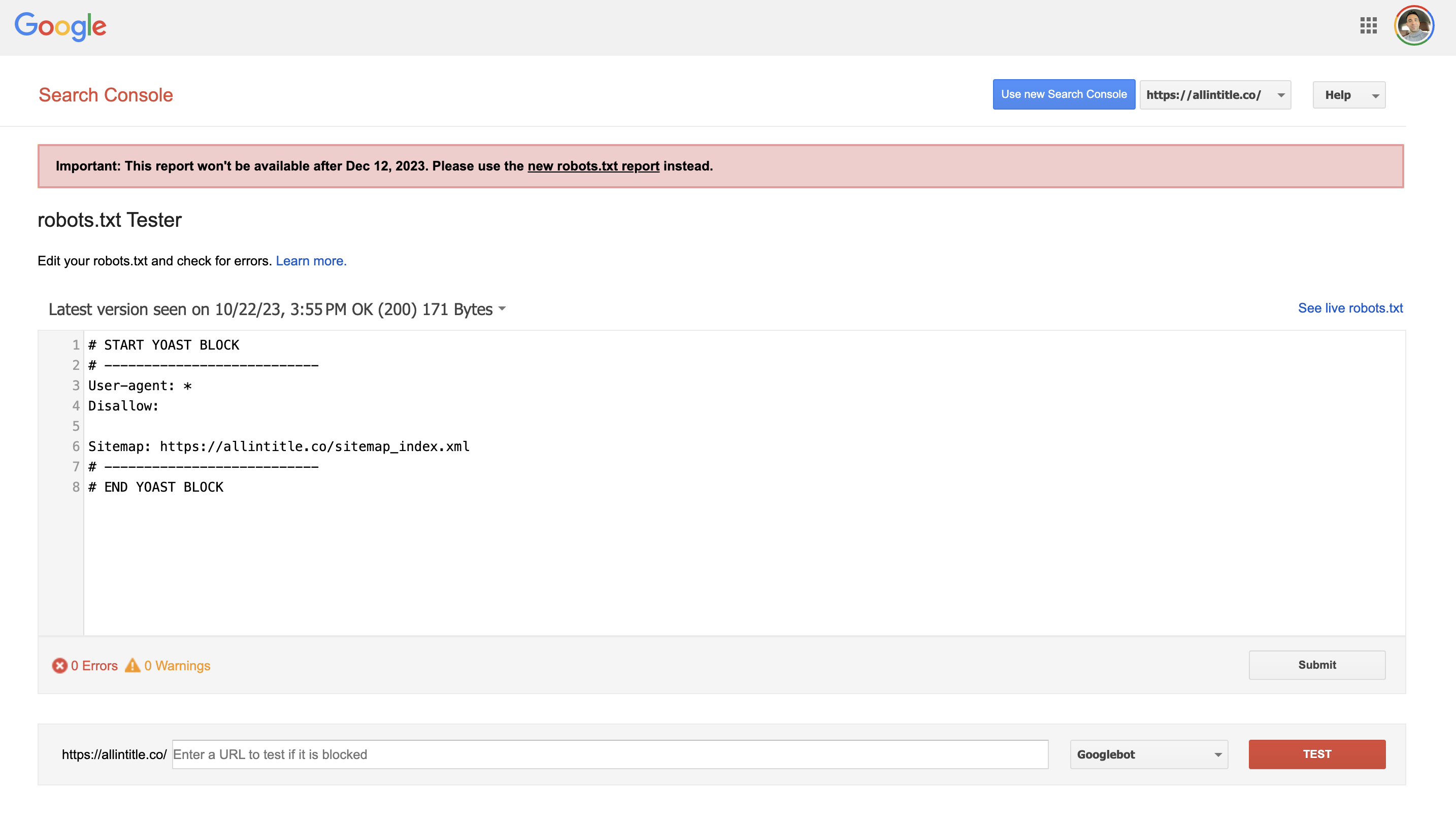

In a significant update, Google has added a new robots.txt report to Search Console and decided to sunset its longstanding Robots.txt Tester tool. Industry expert Barry Schwartz reported this change on November 15, 2023. It marked a major shift in how webmasters test their robots.txt files against Google's crawlers.

Google's legacy robots.txt tester remained accessible until December 12, 2023.

What's a Robots.txt file?

A robots.txt file is a plain text file placed in the root directory of a website (for example, https://www.example.com/robots.txt). It tells web crawlers which pages or files they can or cannot request from your site. For crawlers that support it, it can also say how often they should crawl the site. It is primarily used to keep your site from being overloaded with requests from web crawlers and spiders; it is not a mechanism for keeping a web page out of Google. Key use cases include:

- Directing search engines or other web crawlers on which parts of your site should or should not be crawled.

- Preventing certain pages from being crawled to avoid overloading your site’s resources.

- Helping search engines focus their crawling on important pages by keeping them away from low-value URLs.

- Discouraging crawlers from accessing sensitive areas of your site (though not a security measure).

- Supporting SEO by keeping search engines from crawling duplicate pages or internal search results pages.
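The file must sit at the root of the host, so the robots.txt that governs any page can be derived from the page's URL. Below is a small Python sketch that uses only the standard library. `robots_txt_url` is a hypothetical helper name.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the URL of the robots.txt file that governs page_url.

    A robots.txt file applies only to the scheme, host, and port it is served
    from, so those are kept while the path, query, and fragment are replaced.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post?id=7#top"))
# https://www.example.com/robots.txt
print(robots_txt_url("http://example.com:8080/shop/item"))
# http://example.com:8080/robots.txt
```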

Robots.txt Syntax Example

Understanding the syntax of a robots.txt file is key to effectively managing how search engines or other web spiders interact with your site. Here's a basic example to illustrate common directives and their usage:

```
User-agent: *
Disallow: /private/
Allow: /public/
Sitemap: http://www.example.com/sitemap.xml
Crawl-delay: 10
```

In this example:

- The User-agent: * directive applies the following rules to all crawlers.

- Disallow: /private/ tells crawlers to avoid the /private/ directory.

- Allow: /public/ explicitly permits crawling of the /public/ directory.

- The Sitemap: directive points crawlers to the site's XML sitemap so they can discover its URLs efficiently.

- Crawl-delay: 10 asks crawlers to wait 10 seconds between requests, reducing server load. Support varies by crawler, and Googlebot ignores this directive.
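To see how a crawler might interpret the example, you can feed it to Python's standard-library parser, `urllib.robotparser`. This is only a sketch. "AnyBot" is a made-up user agent. This parser also applies the first matching Allow/Disallow rule in file order rather than the longest-match rule Google uses, so it can give different answers for overlapping rules.

```python
from urllib.robotparser import RobotFileParser

# The example file from above, one directive per line.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /public/
Sitemap: http://www.example.com/sitemap.xml
Crawl-delay: 10
"""

rp = RobotFileParser()
rp.parse(EXAMPLE_ROBOTS_TXT.splitlines())  # parse local text instead of fetching a URL

for path in ("/private/report.html", "/public/index.html", "/about"):
    url = "http://www.example.com" + path
    print(path, "->", "allowed" if rp.can_fetch("AnyBot", url) else "blocked")
# /private/report.html -> blocked
# /public/index.html -> allowed
# /about -> allowed

print("crawl delay:", rp.crawl_delay("AnyBot"))  # crawl delay: 10
print("sitemaps:", rp.site_maps())  # sitemaps: ['http://www.example.com/sitemap.xml']
```

`crawl_delay()` needs Python 3.6 or later, and `site_maps()` needs 3.8 or later.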

This simple example shows how a robots.txt file is structured and how each directive affects crawler behavior. Below is a list of directives that can be included in a robots.txt file.

Common Directives

- User-agent: Specifies which crawler the rules that follow apply to (an asterisk matches all crawlers).

- Disallow: Prevents crawlers from requesting the specified paths, such as private or sensitive folders.

- Allow: Permits crawling of specific pages or subdirectories inside an otherwise disallowed path.

- Sitemap: Points crawlers to your sitemap so they can find all eligible pages on your site.

- Crawl-delay: Reduces server load by limiting the rate of crawling.
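When Allow and Disallow rules overlap, RFC 9309 applies the most specific match, meaning the rule with the longest path, and Allow wins a tie. Google documents the same precedence. The following simplified Python sketch implements that precedence, including the `*` and `$` wildcards. It skips percent-encoding normalization, and the function names and sample rules are hypothetical.

```python
import re


def _pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a prefix-matching regex.

    '*' matches any run of characters; a trailing '$' anchors the end.
    """
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(chunk) for chunk in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Decide access using the longest matching rule.

    rules holds (field, pattern) pairs such as ("disallow", "/private/").
    An allow and a disallow of equal length resolve to allow, and a path
    that matches no rule is allowed.
    """
    best_len, allowed = -1, True
    for field, pattern in rules:
        if not pattern:  # an empty rule (e.g. "Disallow:") matches nothing
            continue
        if _pattern_to_regex(pattern).match(path):  # re.match anchors at the start
            is_allow = field.lower() == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


rules = [
    ("disallow", "/private/"),
    ("allow", "/private/annual-report.html"),
    ("disallow", "/*.pdf$"),
]
print(is_allowed("/private/notes.html", rules))          # False
print(is_allowed("/private/annual-report.html", rules))  # True
print(is_allowed("/docs/guide.pdf", rules))              # False
print(is_allowed("/docs/guide.pdf?v=2", rules))          # True
```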

Less Common Directives

- Noindex: Indicates that a page should not be indexed. Google stopped honoring noindex in robots.txt in September 2019, so use a robots meta tag or an X-Robots-Tag header instead.

- Host: Specifies the preferred domain for indexing (historically recognized mainly by Yandex).

- Noarchive: Prevents search engines from storing a cached copy of the page (more commonly used in meta tags).

- Nofollow: Instructs search engines not to follow links on the specified page. Google does not support it in robots.txt, so set it in a robots meta tag instead.
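Several of these directives are ignored by major crawlers, so a checker can flag them. Here is a simplified Python linter sketch. The "supported" set reflects the fields Google documents (user-agent, allow, disallow, and sitemap). The warning wording, the sample file, and the helper names are assumptions, not how our tool reports issues.

```python
# Fields Google documents support for, versus nonstandard fields it ignores.
SUPPORTED_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}
NONSTANDARD_FIELDS = {"crawl-delay", "host", "noindex", "noarchive", "nofollow"}


def lint_robots_txt(text: str) -> list[str]:
    """Return human-readable warnings for a robots.txt file (simplified)."""
    warnings: list[str] = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"line {number}: missing ':' separator")
            continue
        field = line.split(":", 1)[0].strip().lower()
        if field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow") and not seen_user_agent:
            warnings.append(f"line {number}: '{field}' appears before any user-agent")
        elif field in NONSTANDARD_FIELDS:
            warnings.append(f"line {number}: '{field}' is not supported by Google")
        elif field not in SUPPORTED_FIELDS:
            warnings.append(f"line {number}: unknown field '{field}'")
    return warnings


sample = """\
Disallow: /tmp/
User-agent: *
Noindex: /drafts/
Disalow: /old/
Crawl-delay 5
"""
for warning in lint_robots_txt(sample):
    print(warning)
# line 1: 'disallow' appears before any user-agent
# line 3: 'noindex' is not supported by Google
# line 4: unknown field 'disalow'
# line 5: missing ':' separator
```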

Common Web Crawlers

Below is a list of well-known web crawlers that we have identified in many of the robots.txt files we analyzed. We add new crawlers from time to time, but the list covers only the most frequent ones and is by no means exhaustive.

- Google-Extended: A Google product token, not a separate crawler, that lets sites control whether their content is used to improve Google's Gemini AI models.

- ChatGPT-User: OpenAI's user agent for pages that ChatGPT fetches on behalf of a user during a conversation.

- TeleportBot: A virtual navigation bot used in applications requiring digital traversal of environments.

- anthropic-ai: A user-agent token associated with Anthropic, the developer of the Claude AI models.

- omgilibot: A crawler for the search engine OMGILI, focusing on indexing forum and discussion content.

- WebCopier: A bot designed to download websites for offline viewing.

- WebStripper: Downloads website content for local use, often for data extraction.

- SiteSnagger: A tool for downloading complete websites for offline access.

- WebZIP: A bot that downloads websites for local browsing and archival purposes.

- GPTBot: OpenAI's web crawler, which collects content that may be used to train its generative AI models.

- Googlebot: Google's main web crawler for indexing sites for Google Search.

- Bingbot: Microsoft's crawler for indexing content for Bing search results.

- Yandex Bot: A crawler for the Russian search engine Yandex.

- Apple Bot: Indexes webpages for Apple’s Siri and Spotlight Suggestions.

- DuckDuckBot: The web crawler for DuckDuckGo.

- Baiduspider: The primary crawler for Baidu, the leading Chinese search engine.

- Sogou Spider: A crawler for the Chinese search engine Sogou.

- Facebook External Hit: Fetches pages shared on Facebook to generate link previews.

- Exabot: The web crawler for Exalead.

- Swiftbot: Swiftype's web crawler for custom search engines.

- Slurp Bot: Yahoo's crawler for indexing pages for Yahoo Search.

- CCBot: Common Crawl's crawler, which collects web data for research.

- GoogleOther: A newer Google crawler for internal use.

- Google-InspectionTool: Used by Google's Search testing tools.

- MJ12bot: Majestic's web crawler.

- Pinterestbot: Pinterest's crawler for images and metadata.

- SemrushBot: Semrush's crawler for collecting SEO data.

- Dotbot: Moz's crawler, which gathers data for its link index.

- AhrefsBot: Ahrefs' bot for SEO audits and link building.

- Archive.org_bot: Used by the Internet Archive to save web page snapshots.

- Soso Spider: Crawler for the Soso search engine by Tencent.

- SortSite: A crawler for testing, monitoring, and auditing websites.

- Apache Nutch: An extensible and scalable open-source web crawler.

- Open Search Server: A Java web crawler for creating search engines or indexing content.

- Headless Chrome: A browser operated from the command line or server environment.

- Chrome-Lighthouse: Google's Lighthouse tool for auditing page performance, accessibility, and SEO.

- Adbeat: A crawler for site and marketing audits.

- Comscore / Proximic: Used for online advertising.

- Bytespider: ByteDance's web crawler.

- PetalBot: A crawler for Petal Search.
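A common use of this list is to disallow specific crawlers by name. The sketch below builds a single robots.txt group for a selection of AI-related tokens from the list above. A group may contain several User-agent lines. The sketch then checks the result with Python's `urllib.robotparser`. The token selection and the `block_group` helper are hypothetical, and which bots to block is a per-site decision.

```python
from urllib.robotparser import RobotFileParser

# Example selection of tokens from the list above; adjust per site.
AI_CRAWLERS = ["GPTBot", "ChatGPT-User", "Google-Extended", "anthropic-ai", "CCBot", "Bytespider"]


def block_group(tokens: list[str], path: str = "/") -> str:
    """Build one robots.txt group that disallows `path` for every token."""
    lines = [f"User-agent: {token}" for token in tokens]
    lines.append(f"Disallow: {path}")
    return "\n".join(lines) + "\n"


# Blocked crawlers get their own group; every other crawler may crawl everything.
robots_txt = block_group(AI_CRAWLERS) + "\nUser-agent: *\nAllow: /\n"
print(robots_txt)

# Sanity check with Python's standard-library parser.
rp = RobotFileParser()
rp.parse(robots_txt.splitlines())
print(rp.can_fetch("GPTBot", "https://www.example.com/"))     # False
print(rp.can_fetch("Googlebot", "https://www.example.com/"))  # True
```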